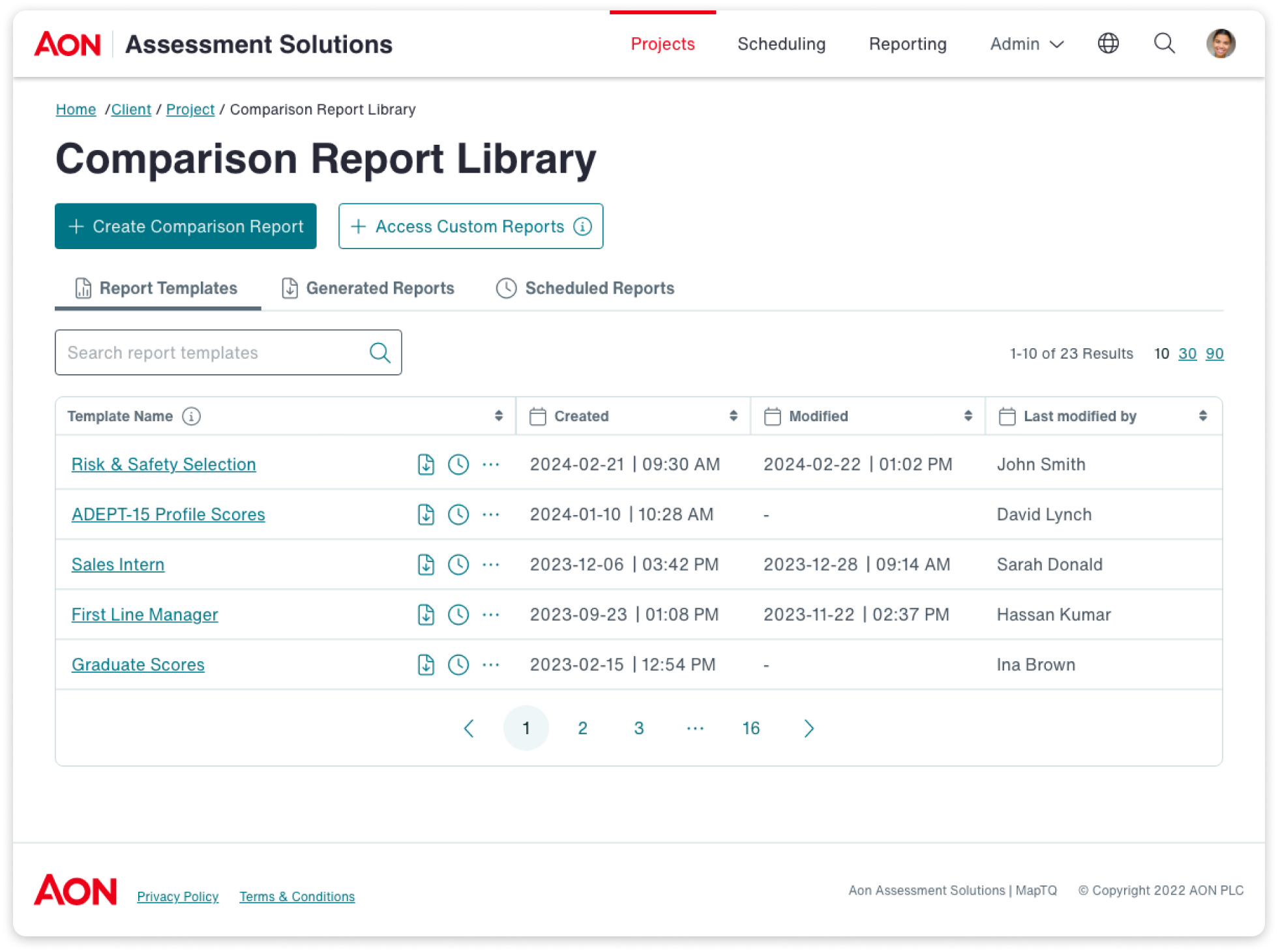

Aon Assessment Solutions

Comparison Report Builder

Existing Issues

When it comes to configuring these reports, clients are provided with 3 reporting solutions:

- A standard report that covers basic assessment scores/results.

- A configurable report that contains basic scoring as well as additional scoring requests that are set up manually by the operations team.

- A custom report that contains unique scoring requests, such as scoring combinations, that are set up on a case-by-case basis by the IT team.

As client requests get more complex, the IT team are required to build these requests manually because the current setup process offers limited customisation. Creating these reports currently uses 11% of IT resource, an amount we wanted to reduce.

Additional pain points for users include the length of time it takes to create a single report. Users were frustrated by the number of repetitive steps needed to create the same or similar reports. To generate the same report on different days, a user had to go through the report setup process twice, remembering every setting they had selected the first time.

Product Goals

Alongside addressing the current pain points of the system, we are designing to accommodate the migration of another system’s clients and to close any feature gaps that stand in the way of that migration.

With all of this in mind, our goals for this product were as follows:

- Improve the overall UX of creating a report, with emphasis on reducing the time it takes to create one.

- Reduce the workload on the IT team by adding functionality into the report builder that can accommodate use cases that currently require manual implementation.

- Include scores that clients frequently request but that are not part of a standard report, reducing the workload on the operations team, who currently have to create these as custom scores.

- Close reporting feature gaps to allow a seamless transition of clients from another system into this system.

User Research

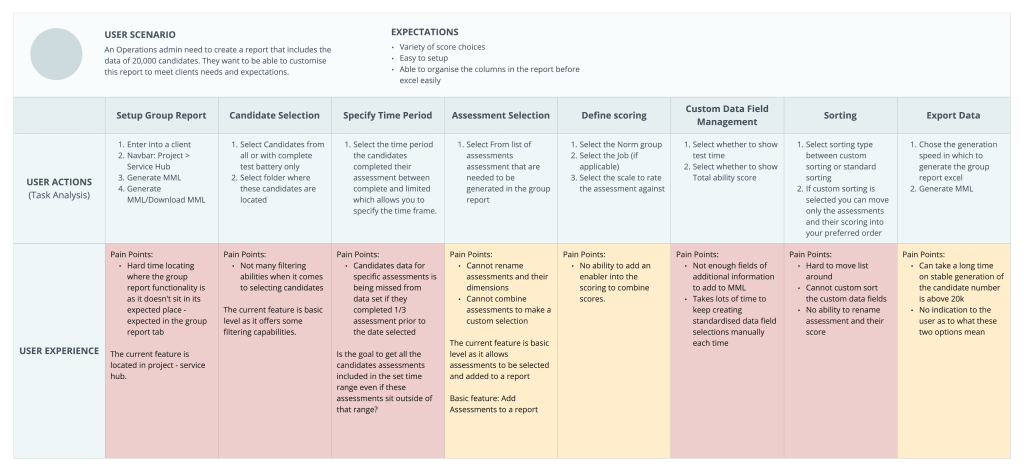

Before beginning with any design work we needed to establish the following things:

- What selections do users have in the current report builder?

- What selections are users looking for when creating their report that are currently missing?

- What do users like/dislike about the current system?

- What scores are highly requested by clients that currently have to be set up manually?

- What customisations are clients asking for that have to be manually implemented by the IT team?

- Are there any current system limitations we need to adhere to?

- What are the feature gaps we need to fill to accommodate a successful migration of clients from another system?

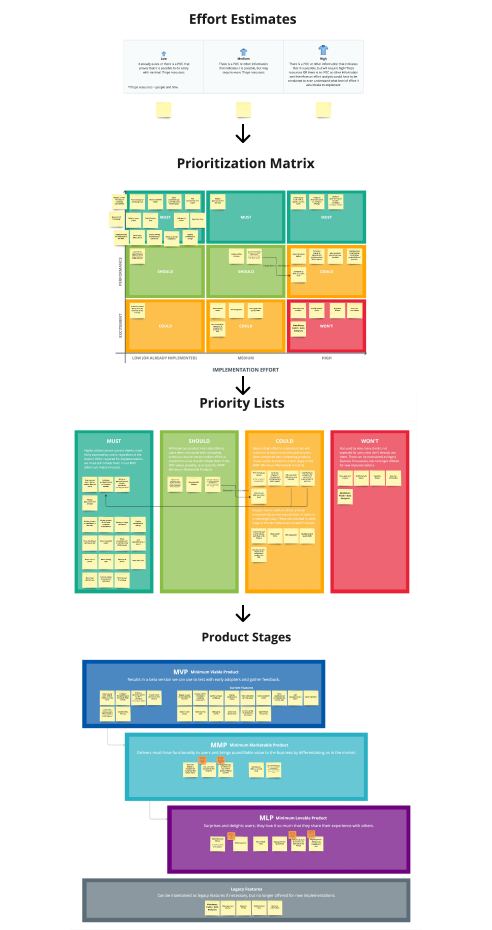

With our answers to these questions translated into a potential feature list, we created a feature prioritisation matrix to determine basic, performance and excitement features, taking development times into consideration when mapping out our product release stages. From this research we arrived at an agreed feature list for the MVP.

However, due to system limitations, certain features that we would have considered for the MVP became out of scope.

Technical Research

Following on from our refined feature list, and given the complex nature of scoring and reporting for assessments, we needed an in-depth technical understanding of the product in order to provide the best design solutions.

For context, each assessment can provide many scores, from employability skill scores to the quality of the data provided, each with complex calculations behind it. Scores can be provided and customised based on a client request.

To make sense of this scoring logic, and to design a builder in which users can intuitively understand the output of each selection they make, we created an in-depth document covering all scores and their associated assessments.

Design Decisions

Once we had an overall understanding of scoring and reporting, with enough knowledge to improve and change how scores are selected alongside assessments, we began applying UX best practice and taking on key feedback from stakeholders.

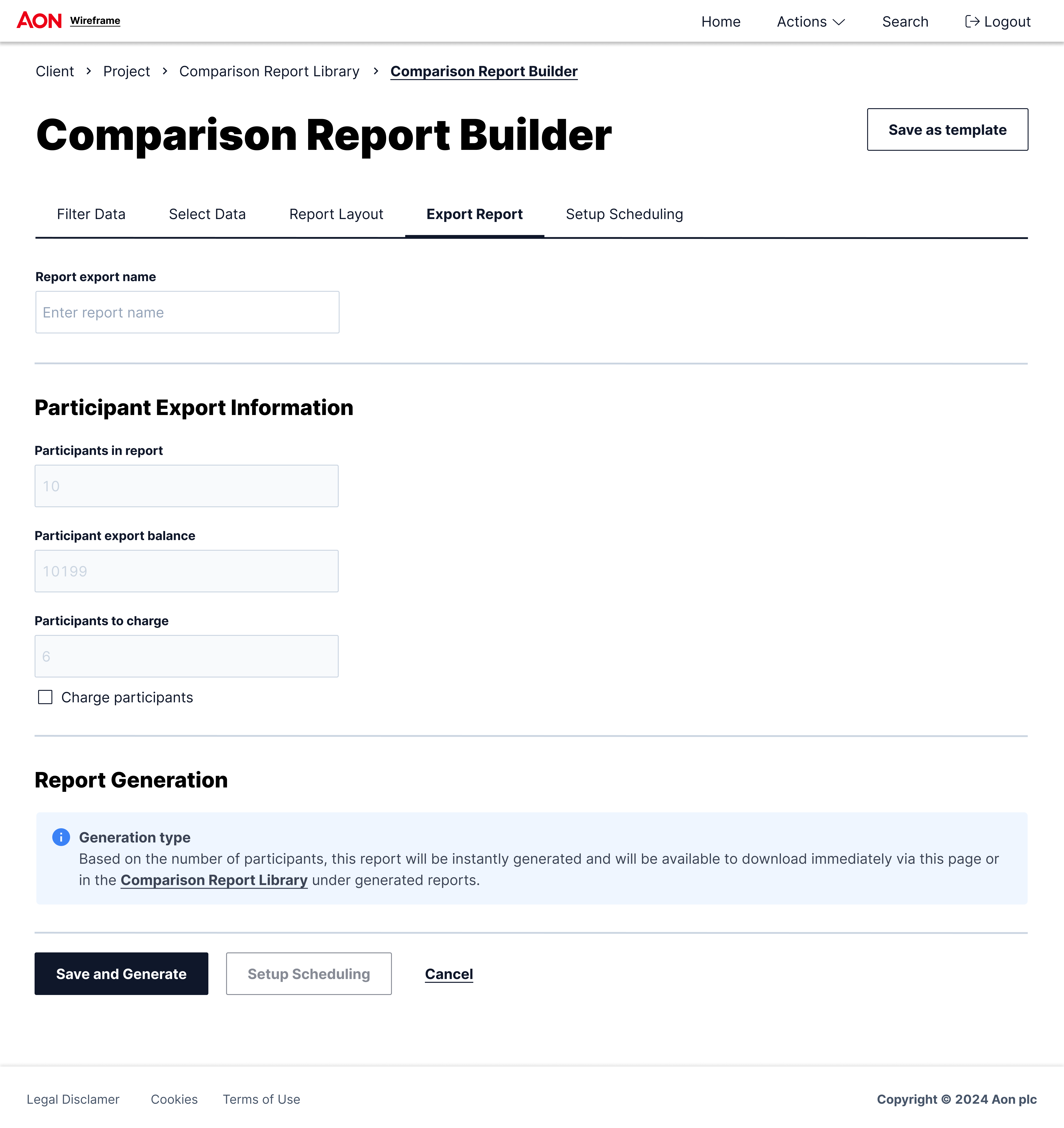

For our MVP, our deliverables were mid-fidelity wireframes. Because of the large implementation effort needed from development to deliver the new experience, as well as interfaces in keeping with our new design system, a business decision was made to provide designs as wireframes only. That said, our designs still covered all necessary use cases, for both happy and sad paths, for development to use and reference.

Our first step in the design process was to map out a holistic user flow showing the journey the user would take when creating a report.

Persona and User Flow

We established our persona and outlined the user’s expectations and requirements for the product. From here we mapped out the basis for the journey of creating a report.

Ideation

With our user flow mapped out, we began wireframing the key screens, focusing on UX improvements first to show the overall structure of the builder before moving on to more complex, smaller features.

There was a lot of iteration at this stage, as many of our ideas were out of scope for the IT team’s capabilities. As the project progressed, however, there was a push to deliver our ‘better’ UX even if it would take the IT team longer to implement.

Testing

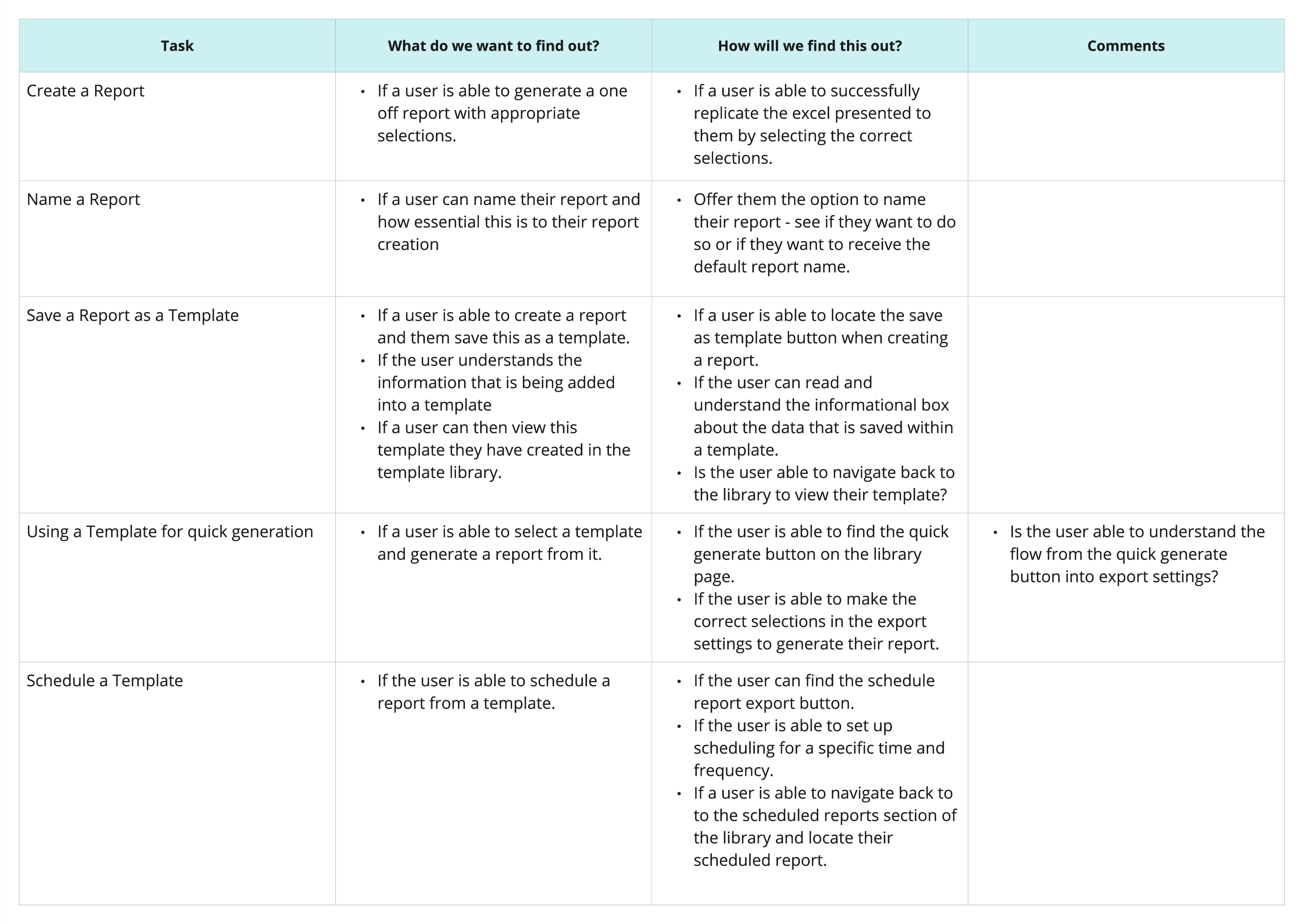

We first wanted to get our users’ initial thoughts on one of our potential designs through a series of moderated design review sessions with internal admins.

We kept our scenarios basic, asking admins to make selections to achieve a report output that was provided to them.

Test Plan

We began by devising a test plan to define our testing goals and map out our scenarios and tasks.

Results

We found that the majority of users were able to complete the tasks assigned. However, we still had refinements to make based on feedback. Some of these refinements included:

- Addressing the wording of certain selections and headings

- Providing more explanation of certain scoring types for clarity

Next Steps

As this product progresses, we look to add functionality that aligns with our admins’ expectations, and to continue delivering and testing it accordingly.

We hope to deliver fully-fledged prototypes in the near future and to continue improving system design and functionality.